
The Challenge of Spatial Audio on Headphones

The rise of virtual and augmented reality applications has sparked renewed interest in binaural audio – sound designed specifically for headphone listening. Yet converting existing multichannel electroacoustic compositions to work effectively through headphones remains a complex undertaking that involves both technical precision and artistic sensitivity.

The Sounding Future platform's 3D AudioSpace exemplifies this challenge. This innovative streaming platform allows artists worldwide to showcase their spatial compositions in high-quality binaural 3D audio, making immersive sound accessible to global audiences without specialized hardware. However, the process of adapting these works for headphones reveals fundamental issues with binaural reproduction that have persisted for decades.

Why Headphones Sound Different

Despite technological advances, headphone playback still faces inherent perceptual problems that don't exist with natural hearing or loudspeaker systems.

In-Head Localization occurs when sounds appear to originate inside the listener's head rather than from external sources. This phenomenon exists on a continuum rather than as a binary state, significantly affecting the listening experience.

Front-Back Confusion prevents listeners from distinguishing whether sounds come from in front or behind them, because mirrored front and rear positions produce nearly identical interaural time and level differences. In natural listening, subtle head movements resolve this ambiguity: the interaural differences shift in opposite directions for front and rear sources.
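The front-back ambiguity can be illustrated with a small numerical sketch. It uses Woodworth's spherical-head approximation of the interaural time difference (ITD), which is a textbook simplification and not a model taken from the paper; the head radius, the speed of sound, and the function name `woodworth_itd` are assumptions made for illustration.

```python
import math

HEAD_RADIUS_M = 0.0875    # assumed average head radius (illustrative value)
SPEED_OF_SOUND = 343.0    # metres per second, assumed room temperature


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate ITD in seconds for a distant source in the horizontal plane.

    Azimuth is measured from straight ahead toward the right ear,
    so 30 is front-right and 150 is the mirrored position back-right.
    """
    az = math.radians(azimuth_deg)
    # Fold rear positions onto the front: only the lateral angle matters.
    lateral = math.asin(math.sin(az))
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (lateral + math.sin(lateral))


# Static head: the front and the mirrored rear source give the same ITD.
itd_front = woodworth_itd(30.0)
itd_back = woodworth_itd(150.0)

# Head turned 10 degrees to the right: both sources shift by -10 degrees
# relative to the head, but their ITDs now move in opposite directions.
itd_front_turned = woodworth_itd(30.0 - 10.0)
itd_back_turned = woodworth_itd(150.0 - 10.0)
```

With a static head the two values coincide, which is the ambiguity described above. After the head turn, the ITD of the frontal source shrinks while that of the rear source grows, which is the cue a head-tracked renderer can restore.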

The Room Divergence Effect creates perceptual conflict when binaural acoustic information suggests one environment while visual and proprioceptive inputs indicate another – such as sitting in your living room while hearing a concert hall.

These issues result in an unnaturally wide soundstage where sources appear predominantly from the sides, while frontal sounds fail to externalize properly and instead seem localized within the head.

Technical Solutions and Their Limitations

Several approaches can address these challenges, though each comes with practical constraints.

Personalized Head-Related Transfer Functions (HRTFs) accurately model individual ear, head, and torso reflections. However, the extensive measurement procedures remain time-consuming and complex, limiting widespread adoption.

Head tracking can partially mitigate front-back confusion by keeping sound sources stationary as the listener's head moves, reducing in-head localization. While some headphones now include this technology, it's not universally available.

Binaural virtualization represents perhaps the most practical approach. This method acoustically virtualizes loudspeakers within a room environment, modeling real loudspeaker behavior in listening spaces. Adding reverberant acoustic information to otherwise dry sounds significantly improves localization by providing additional spatial cues that situate sounds within an acoustic context.
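In signal-processing terms, virtualizing loudspeakers usually means convolving each loudspeaker feed with a pair of binaural room impulse responses (BRIRs), one per ear, and summing the results. The following is a minimal sketch of that idea, not the method of any particular plugin; the function names and the toy impulse responses are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def virtualize(speaker_feeds, brirs):
    """Render loudspeaker feeds to two headphone channels.

    speaker_feeds: one list of samples per virtual loudspeaker.
    brirs: one (left_ear_ir, right_ear_ir) pair per virtual loudspeaker.
    Assumes all feeds share one length and all BRIRs share one length.
    """
    length = len(speaker_feeds[0]) + len(brirs[0][0]) - 1
    left = [0.0] * length
    right = [0.0] * length
    for feed, (ir_left, ir_right) in zip(speaker_feeds, brirs):
        for n, value in enumerate(convolve(feed, ir_left)):
            left[n] += value
        for n, value in enumerate(convolve(feed, ir_right)):
            right[n] += value
    return left, right


# Toy data: two loudspeakers, each BRIR is a direct sound plus one reflection.
feeds = [[1.0, 0.0], [0.0, 0.0]]            # impulse on the first speaker only
toy_brirs = [
    ([1.0, 0.0, 0.5], [0.5, 0.0, 0.25]),    # speaker 1: louder at the left ear
    ([0.5, 0.0, 0.25], [1.0, 0.0, 0.5]),    # speaker 2: louder at the right ear
]
left_out, right_out = virtualize(feeds, toy_brirs)
```

The reflection in each toy BRIR stands in for the room information that a dry, anechoic HRTF lacks. Real BRIRs are thousands of samples long, so practical renderers use FFT-based convolution instead of the direct form shown here.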

The Virtualization Dilemma

Implementing virtualization raises fascinating questions about artistic intent. Should the ideal listening environment be an anechoic chamber with no reflections? While studio monitors are optimized in such spaces, listening to music in completely reflection-free environments creates its own problems.

This is especially true for Ambisonics – the format many electroacoustic composers work with. Ambisonics actually benefits from slight reverberation, which compensates for spatial aliasing caused by the mathematical truncation of spherical harmonics in practical implementations.

The question becomes: does adding virtual reverberation to compositions that already contain specific virtual spaces fundamentally alter the work? This parallels how any concert venue affects a piece's sound, something composers have always navigated, often unconsciously incorporating venue characteristics into their compositional process. Early choral works written for large cathedrals are one example.

A Case for Ambisonics

Working with channel-based formats presents significant standardization challenges. Quadraphonic and octophonic configurations lack consistent channel numbering systems, and even established formats like 5.1 surround sound have multiple conventions with varying speaker angles.

In contrast, Ambisonics B-Format follows strict standards (AmbiX or the older FuMa format) for channel numbering and normalization. The speaker angles depend entirely on the playback configuration rather than being embedded in the format itself.
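To make the two conventions concrete, the sketch below shows the channel count per order, the ACN channel index used by AmbiX, and a first-order conversion from FuMa to AmbiX. The helper names are hypothetical, and the conversion covers first order only; higher orders need additional per-channel scaling factors that are not shown here.

```python
import math


def ambisonic_channel_count(order: int) -> int:
    """Number of channels of a full 3D Ambisonics signal of the given order."""
    return (order + 1) ** 2


def acn_index(degree: int, index: int) -> int:
    """ACN channel number for spherical harmonic degree l and index m."""
    return degree * degree + degree + index


def fuma_to_ambix_first_order(w, x, y, z):
    """Convert one first-order FuMa sample (W, X, Y, Z) to AmbiX.

    FuMa stores W attenuated by 1/sqrt(2); AmbiX uses SN3D normalization,
    where W has unit gain, and ACN ordering, which is W, Y, Z, X.
    """
    return (w * math.sqrt(2.0), y, z, x)


ambix_sample = fuma_to_ambix_first_order(1.0, 2.0, 3.0, 4.0)
```

Because both the ordering and the gain of W differ, feeding a FuMa file into an AmbiX decoder without conversion rotates and distorts the sound field, which is why the convention has to be known before decoding.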

Converting channel-based compositions to Ambisonics offers several advantages: enabling playback on various loudspeaker arrays beyond the original configuration, allowing integration of Ambisonics-specific effects, and providing sweet spots that can accommodate larger audiences in higher-order implementations.

The Conversion Process

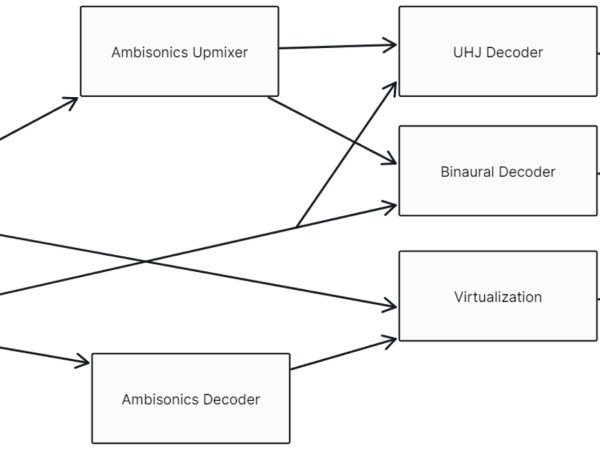

The AudioSpace platform offers three distinct formats, each serving different listening scenarios:

Stereo versions use the Ambisonics UHJ format, a hierarchical encoding system from the 1970s that provides improved stereo representation while maintaining mono compatibility.

Binaural conversion employs specialized decoders that transform Ambisonics audio into two-channel headphone-optimized audio using frequency-dependent matrices derived from HRTFs. The matrix combines the spatial information contained in the Ambisonics channels with HRTF data that captures how sound is naturally modified by the head, torso and ears.
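One common way to obtain such a matrix is to fold a loudspeaker decoder together with the HRIRs of the virtual loudspeaker directions, which yields one filter pair per Ambisonics channel. The sketch below works in the time domain, where each matrix entry becomes a short FIR filter. The decoder gains and HRIR values are toy numbers chosen for illustration, not measured data, and `binaural_filters` is a hypothetical name.

```python
def binaural_filters(decoder, hrirs):
    """Combine a decoder matrix with HRIRs into per-channel ear filters.

    decoder[s][c]: gain from Ambisonics channel c to virtual speaker s.
    hrirs[s]: (left_ear_ir, right_ear_ir) for the direction of speaker s.
    Returns filters[c] = (left_ear_ir, right_ear_ir) for channel c.
    """
    n_channels = len(decoder[0])
    ir_length = len(hrirs[0][0])
    filters = []
    for c in range(n_channels):
        pair = []
        for ear in range(2):
            ir = [0.0] * ir_length
            for s, row in enumerate(decoder):
                for n in range(ir_length):
                    ir[n] += row[c] * hrirs[s][ear][n]
            pair.append(ir)
        filters.append(tuple(pair))
    return filters


# Toy setup: channels (W, Y), one virtual speaker on the left, one on the right.
toy_decoder = [
    [0.5, 0.5],     # left speaker: W + Y (Y points left in AmbiX)
    [0.5, -0.5],    # right speaker: W - Y
]
toy_hrirs = [
    ([1.0, 0.0], [0.0, 0.5]),   # left speaker: right ear is later and quieter
    ([0.0, 0.5], [1.0, 0.0]),   # right speaker: mirrored
]
filters = binaural_filters(toy_decoder, toy_hrirs)
```

Rendering then needs only two convolutions per Ambisonics channel, independent of how many virtual loudspeakers were used to derive the filters.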

Binaural+ adds virtual room acoustics to the conversion process. After extensive testing, the Virtuoso plugin from the Applied Psychoacoustics Lab (APL) was selected for its flexibility and comprehensive functionality. The virtual environment uses APL's critical listening room model, which complies with the ITU-R BS.1116-3 recommendation and provides a good spatial impression without excessive acoustic reflections.

Practical Implementation Insights

The conversion workflow requires careful consideration of decoding methods and speaker configurations. For Ambisonics compositions processed through virtualization, the 26-point Lebedev design was chosen as the virtual speaker array due to its high speaker density and standard distribution pattern.
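For readers who want to reproduce the layout, the 26 directions can be generated with a few lines of code. The sketch assumes the usual construction of the 26-point Lebedev grid (6 axis points, 12 edge midpoints and 8 corners of a cube, projected onto the unit sphere); the quadrature weights are omitted, and the function name is hypothetical.

```python
import itertools
import math


def lebedev_26_directions():
    """Return 26 (azimuth, elevation) pairs in degrees.

    Azimuth is measured counterclockwise from the front (+x axis),
    elevation upward from the horizontal plane.
    """
    directions = []
    for x, y, z in itertools.product((-1, 0, 1), repeat=3):
        if (x, y, z) == (0, 0, 0):
            continue  # the centre of the cube is not a direction
        norm = math.sqrt(x * x + y * y + z * z)
        azimuth = math.degrees(math.atan2(y, x))
        elevation = math.degrees(math.asin(z / norm))
        directions.append((azimuth, elevation))
    return directions


speakers = lebedev_26_directions()
horizontal_ring = [d for d in speakers if abs(d[1]) < 1e-9]
```

The result contains a horizontal ring of eight speakers at 45-degree spacing, rings above and below it, and one speaker each at the zenith and the nadir.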

Channel-based formats required transcoding to Ambisonics before final conversion. Blue Ripple Sound's O7A Upmixers proved particularly effective, especially with their "Inferred" mode that interpolates space between encoded sources for more coherent virtual speaker array representation.

Interestingly, routing channel-based formats through Ambisonics often produced better results than direct matrix-based conversions, while also facilitating future implementation of dynamic conversion, head tracking, and streaming capabilities.

Looking Forward

As virtual and augmented reality applications continue driving binaural audio adoption, these conversion challenges will only grow in importance. The solutions developed for platforms like AudioSpace provide valuable frameworks for future implementations, while highlighting the complex interplay between technical capabilities and artistic expression in spatial audio.

The field still lacks standardized approaches to binaural conversion of multichannel compositions. However, projects like this contribute essential practical knowledge toward eventual industry standards, ensuring that the rich heritage of electroacoustic spatial music remains accessible to headphone listeners worldwide.

Link to the complete paper on ResearchGate.

Jakob Gille

Jakob Gille began his formal education at the HfM Dresden, where he studied composition and music theory. He is the driving force behind Into Sound, an initiative that has organized multiple concerts in Berlin for 3D loudspeaker setups since its inception in 2018. In his compositions and research he specializes in working with Ambisonics and immersive field recordings. He is currently pursuing a master's degree in computer music and sound art at IEM Graz.


Article translations are machine translated and proofread.
