
Musical performance involves a complex communication process between performer and audience, but until now, we haven't fully understood how emotional messages travel from musician to listener. New research combining audio analysis, real-time emotion tracking, and brain monitoring of solo instrumentalists has revealed the mechanisms behind this musical communication—and the results challenge common assumptions about performance.

The Expressiveness Finding

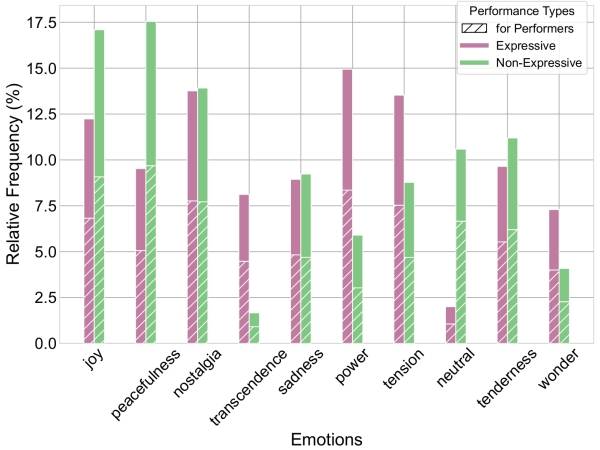

The key finding: expressive and improvisational performances create stronger emotional alignment between performers and audiences compared to technically accurate but non-expressive playing. When musicians played the same pieces both expressively and non-expressively, the emotional disconnect was measurable. Statistical analysis showed significant disagreement between performer intentions and audience perception during non-expressive performances, while expressive and improvisational conditions achieved high emotional alignment.

This isn't just about audience preference—it's about successful emotional transmission. When performers played expressively, high-arousal emotions like power and tension and high-valence emotions like transcendence became more prevalent, while neutral responses nearly disappeared.

Distribution of reported emotions. Comparison between Expressive and Non-Expressive performances.

Audio example: Greensleeves played in an expressive manner.

Audio example: Greensleeves played in a non-expressive manner.

The Acoustic Signature of Connection

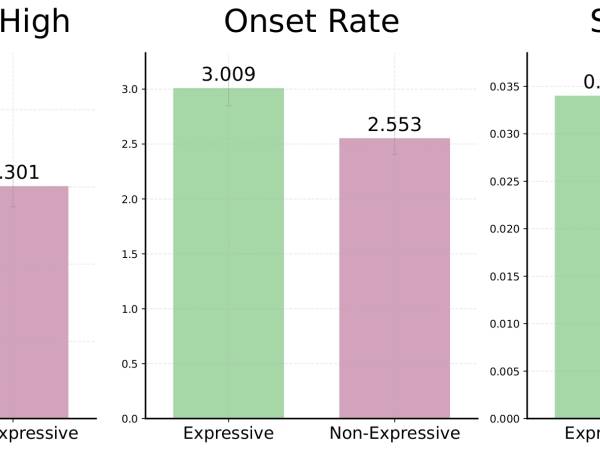

What makes expressive performance work? Audio analysis of over 200 recordings identified three key acoustic features that distinguish expressive from non-expressive playing:

Attack slope: Expressive performances feature sharper, more decisive note attacks. This creates clearer articulation that helps audiences follow the emotional narrative.

Onset rate: Expressive playing contains more note events per unit of time. This does not necessarily mean a faster tempo; it reflects more nuanced rhythmic activity that maintains listener engagement.

Spectral flux: Greater timbral variation—the amount of change in sound color over time—provides richer emotional information for audiences to process.

Improvisation showed the highest attack slopes of all performance types, suggesting that spontaneous creation naturally incorporates these engagement-enhancing features.
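To make the three features above concrete, here is a minimal sketch of how each one could be estimated from a mono signal using NumPy. It is an illustration under simplified definitions, not the feature extraction used in the study: the function names, frame sizes, the 10% onset threshold, and the synthetic test tones are all assumptions made for this example.

```python
import numpy as np

def frames(x, n=256, hop=128):
    """Slice a 1-D signal into overlapping frames, one per row."""
    count = 1 + (len(x) - n) // hop
    idx = np.arange(n)[None, :] + hop * np.arange(count)[:, None]
    return x[idx]

def rms_envelope(x, n=256, hop=128):
    """Frame-wise root-mean-square amplitude."""
    return np.sqrt(np.mean(frames(x, n, hop) ** 2, axis=1))

def onsets(env, rel_threshold=0.1):
    """Frame indices where the envelope rises above a fraction of its maximum."""
    above = np.concatenate(([False], env > rel_threshold * env.max()))
    return np.flatnonzero(above[1:] & ~above[:-1])

def onset_rate(x, sr, n=256, hop=128):
    """Detected note onsets per second."""
    return len(onsets(rms_envelope(x, n, hop))) / (len(x) / sr)

def attack_slope(x, sr, n=256, hop=128):
    """Mean envelope rise per second from just before each onset to the next peak."""
    env = rms_envelope(x, n, hop)
    slopes = []
    for start in onsets(env):
        peak = start
        while peak + 1 < len(env) and env[peak + 1] > env[peak]:
            peak += 1
        floor = env[start - 1] if start > 0 else 0.0
        slopes.append((env[peak] - floor) / ((peak - start + 1) * hop / sr))
    return float(np.mean(slopes)) if slopes else 0.0

def spectral_flux(x, n=256, hop=128):
    """Mean Euclidean distance between successive magnitude spectra."""
    mags = np.abs(np.fft.rfft(frames(x, n, hop) * np.hanning(n), axis=1))
    return float(np.mean(np.linalg.norm(np.diff(mags, axis=0), axis=1)))

def synth_notes(count, sr=8000, sound=0.4, gap=0.1, attack=0.01, freq=440.0):
    """Hypothetical test signal: sine notes with a linear attack and linear decay."""
    n_sound, n_gap, n_att = round(sound * sr), round(gap * sr), round(attack * sr)
    shape = np.concatenate((np.linspace(0, 1, n_att, endpoint=False),
                            np.linspace(1, 0, n_sound - n_att)))
    note = shape * np.sin(2 * np.pi * freq * np.arange(n_sound) / sr)
    return np.tile(np.concatenate((note, np.zeros(n_gap))), count)

sr = 8000
sharp = synth_notes(4, sr, attack=0.01)          # decisive attacks
soft = synth_notes(4, sr, attack=0.2)            # slow, gentle attacks
busy = synth_notes(8, sr, sound=0.2, gap=0.05)   # twice as many notes in the same time
t = np.arange(2 * sr) / sr
steady = np.sin(2 * np.pi * 440 * t)                    # constant timbre
glide = np.sin(2 * np.pi * (440 * t + 110 * t ** 2))    # pitch sweeps 440 -> 880 Hz

print("attack slope:", attack_slope(sharp, sr), attack_slope(soft, sr))
print("onset rate:", onset_rate(sharp, sr), onset_rate(busy, sr))
print("spectral flux:", spectral_flux(steady), spectral_flux(glide))
```

On these synthetic tones the sharper attacks, the denser note sequence, and the gliding tone each score higher on the corresponding feature. Real recordings would need a more robust onset detector than a fixed threshold, and this simple flux measure also reacts to loudness changes, not only to timbre.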

Comparison of statistically significant acoustic features. Analysis between Expressive and Non-Expressive performances.

Technology Reads Musical Emotion

Machine learning models trained on this data achieved remarkable accuracy in predicting emotional responses. The models revealed that peaceful emotions correlate with low spectral flatness and minimal frequency variation—essentially, stable, tonal sounds register as calming across both performers and listeners.
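Spectral flatness, mentioned above, has a compact standard definition: the geometric mean of the power spectrum divided by its arithmetic mean. The sketch below shows that definition in NumPy on two synthetic signals. The function name, the Hann window, and the `eps` guard are choices made for this illustration, not details taken from the study.

```python
import numpy as np

def spectral_flatness(x, eps=1e-12):
    """Geometric mean over arithmetic mean of the power spectrum.

    Values near 0 indicate a tonal signal; values closer to 1 a noise-like one.
    """
    # eps keeps the logarithm finite for bins with (numerically) zero power.
    power = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2 + eps
    return float(np.exp(np.mean(np.log(power))) / np.mean(power))

sr = 8000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 220 * t)                      # stable, tonal sound
noise = np.random.default_rng(0).standard_normal(sr)    # broadband noise

print("tone:", spectral_flatness(tone))
print("noise:", spectral_flatness(noise))
```

The pure tone concentrates its energy in a few frequency bins and so scores close to zero, which is the kind of stable, tonal sound the models associated with peaceful responses.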

Interestingly, the decision trees for performers and audiences differed in complexity. Predictions of performers' emotions relied heavily on spectral features and the performers' own physiological states, while predictions of audience emotions drew on broader acoustic information, including pulse clarity and spectral complexity.

Brain Patterns Reveal Creative States

EEG monitoring uncovered that improvisation produces distinct neural signatures. Alpha brain wave activity—associated with creativity and inhibition of conventional ideas—showed the smallest decrease from resting state during improvisational performances. This suggests improvising musicians enter a more relaxed, creatively open mental state that audiences can perceive.
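A "decrease from resting state" can be expressed as a relative change in alpha-band power between a resting recording and a task recording. The sketch below shows one simple way to compute it with a plain periodogram. The 8 to 13 Hz band limits, the 256 Hz sampling rate, and the synthetic sine-wave "recordings" are assumptions for illustration only; real EEG analysis would typically add artifact removal and an averaged spectral estimate such as Welch's method.

```python
import numpy as np

def band_power(x, fs, lo=8.0, hi=13.0):
    """Sum of periodogram power between lo and hi Hz (inclusive)."""
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / len(x)
    return float(power[(freqs >= lo) & (freqs <= hi)].sum())

def relative_change(task, rest, fs):
    """Fractional change in alpha power from rest to task (negative = decrease)."""
    p_rest = band_power(rest, fs)
    return (band_power(task, fs) - p_rest) / p_rest

fs = 256                                  # hypothetical sampling rate in Hz
t = np.arange(4 * fs) / fs                # four seconds of signal
rest = 2.0 * np.sin(2 * np.pi * 10 * t)   # strong 10 Hz alpha rhythm at rest
# During the task the alpha rhythm is halved and a 30 Hz component appears.
task = 1.0 * np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 30 * t)

print(relative_change(task, rest, fs))
```

Because power scales with the square of amplitude, halving the 10 Hz component gives a change close to -0.75, and the 30 Hz component falls outside the band. In these terms, the finding above means the improvisation condition had the relative change closest to zero.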

Practical Applications

For performers: Focus on attack clarity, rhythmic nuance, and timbral variety rather than just technical accuracy. The data shows that emotional intention translates into measurable acoustic changes that audiences detect.

The research, conducted as part of the witheFlow project—which develops AI systems to augment human emotional expression in music—demonstrates that emotional communication in music follows predictable patterns. This opens possibilities for both enhanced performance training and technology that can assist musicians in achieving better audience connection.

The implications extend beyond performance technique. Understanding these mechanisms could improve music therapy applications, inform composition strategies, and enhance music education by providing objective measures of expressive communication.


Musical emotion isn't mystical—it's measurable, teachable, and now, predictable.

Acknowledgments

The witheFlow project is implemented in the framework of the H.F.R.I. call “Basic research Financing (Horizontal support of all Sciences)” under the National Recovery and Resilience Plan “Greece 2.0”, funded by the European Union – NextGenerationEU. We thank the audience participants for making this work possible.

This article by Vassilis Lyberatos and Spyridon Kantarelis is part of the paper selection of SMC25, the Sound and Music Computing Conference & Summer School, 7-12 July 2025, Graz.

Vassilis Lyberatos & Spyridon Kantarelis

Vassilis Lyberatos is a PhD student at NTUA's Artificial Intelligence and Learning Systems Laboratory. He specialised in Computer Science at the Department of Electrical and Computer Engineering at NTUA. His research interests include explainable AI, machine learning, knowledge and graph representation, music information retrieval, neuroscience and other related fields. He participated in European and Greek AI projects and completed a research residency at DTU Compute. He also supports teaching and serves as a reviewer at international conferences and journals.

Spyridon Kantarelis is a PhD student at NTUA's AI and Learning Systems Lab. His bachelor thesis was "Ontology and algorithm development for harmonic analysis of musical chord progressions". His current research revolves around the applications of Artificial Intelligence in the field of music, especially automatic music analysis using symbolic music data. His research interests include Music Information Retrieval, Knowledge Representation and Reasoning and Ontology Engineering. He studied advanced music theory and accordion at the National Conservatory of Athens.


Article translations are machine translated and proofread.
